We needed to set up a web host for the domain convert.ភាសាខ្មែរ.com for an upcoming project. It should have been simple, but it turned into quite the journey.

A very brief background on IDNs

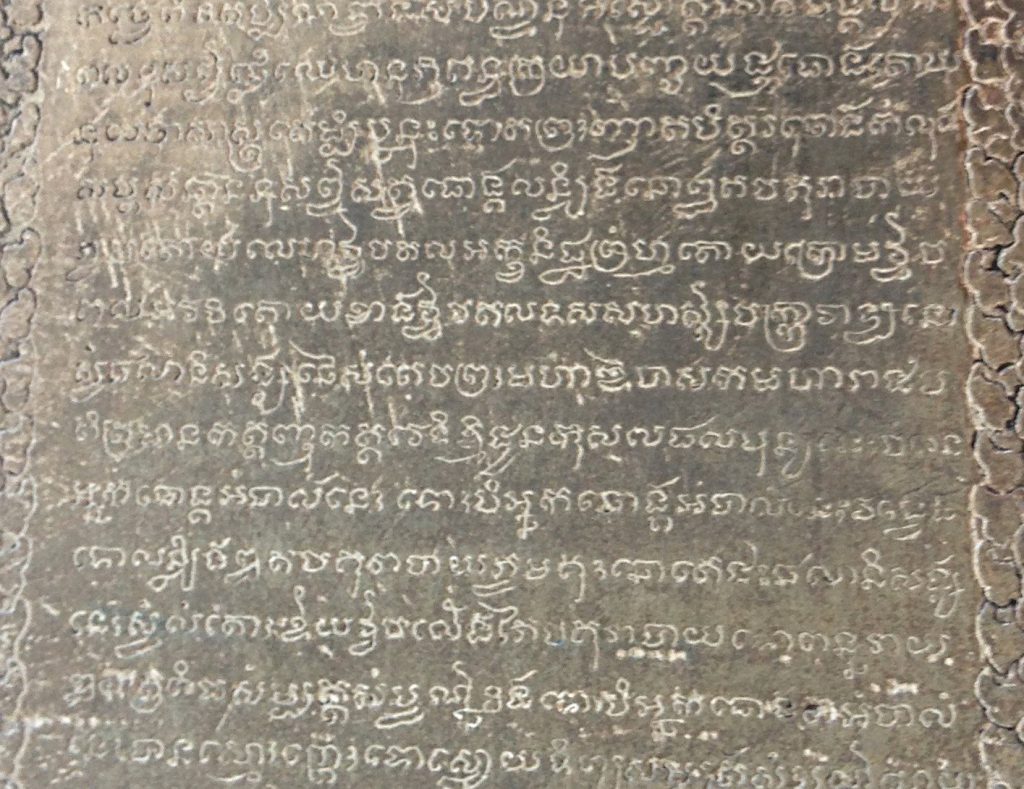

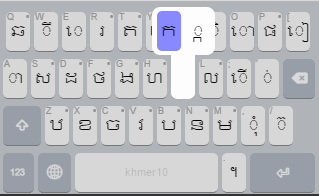

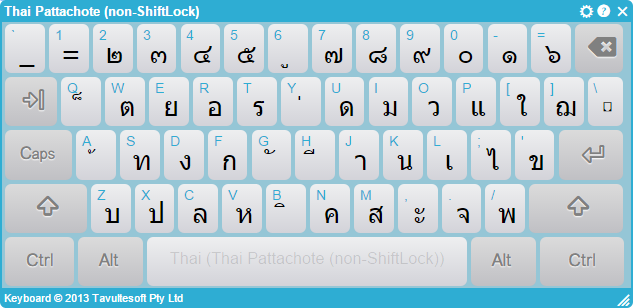

Some background. Notice the use of Khmer characters: ភាសាខ្មែរ /pʰiesaa kmae/ or “Khmer Language”. ភាសាខ្មែរ.com is a domain I’ve owned for some time, having purchased it when I was learning Khmer. Because this domain name uses letters not found in the English alphabet, it is considered to be an Internationalized Domain Name (or IDN). Now, behind the scenes, ភាសាខ្មែរ is not stored using Khmer characters in a Domain Name System (DNS) record. For historical reasons, domain names are restricted to a handful of Latin script letters, digits, and the hyphen, and so the domain must be re-encoded using a system known as ‘punycode’ (truly!).

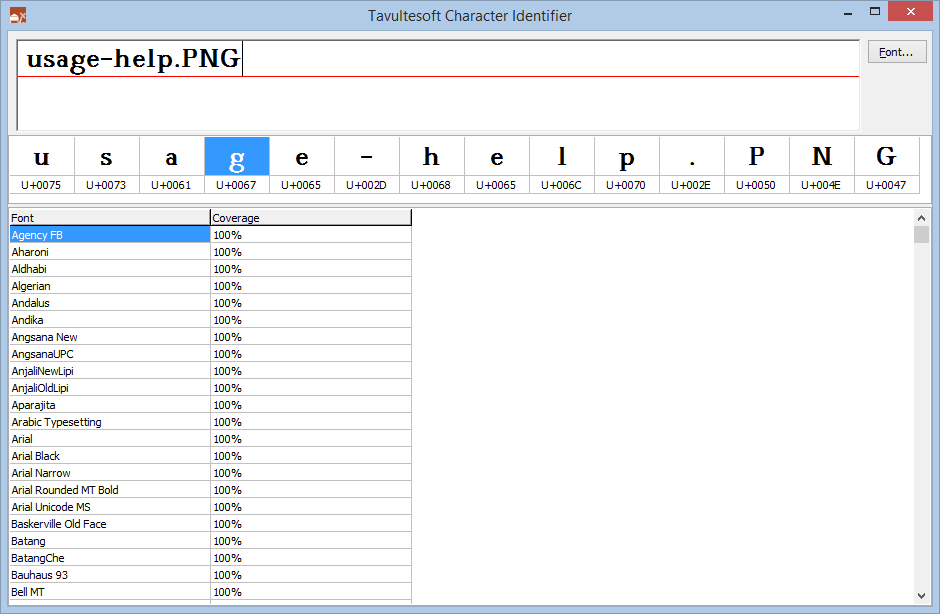

The punycode representation of ភាសាខ្មែរ is the rather bamboozling xn--j2e7beiw1lb2hqg.
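To make the re-encoding concrete, here is a minimal Python sketch that converts a hostname label by label using the standard library's `punycode` codec. The helper names `to_ascii` and `to_unicode` are my own, and the sketch deliberately skips the normalization and validation steps that full IDNA processing requires, so treat it as an illustration rather than a complete implementation.

```python
def to_ascii(hostname: str) -> str:
    """Convert each non-ASCII label of a hostname to its xn-- form.

    Simplification (assumption): no Unicode normalization or IDNA
    validity checks are applied; labels are encoded as given.
    """
    labels = []
    for label in hostname.split("."):
        if label.isascii():
            # Plain ASCII labels pass through unchanged.
            labels.append(label)
        else:
            # The "punycode" codec encodes one label; the "xn--" prefix
            # marks the result as an encoded IDN label.
            labels.append("xn--" + label.encode("punycode").decode("ascii"))
    return ".".join(labels)


def to_unicode(hostname: str) -> str:
    """Reverse of to_ascii: decode any xn-- labels back to Unicode."""
    labels = []
    for label in hostname.split("."):
        if label.lower().startswith("xn--"):
            labels.append(label[4:].encode("ascii").decode("punycode"))
        else:
            labels.append(label)
    return ".".join(labels)


if __name__ == "__main__":
    ascii_name = to_ascii("convert.ភាសាខ្មែរ.com")
    print(ascii_name)              # the xn-- form of the hostname
    print(to_unicode(ascii_name))  # back to the Khmer form
```

Only the Khmer label is transformed; `convert` and `com` are already plain ASCII and are left alone, which is why the punycoded hostname still looks half familiar.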

Now, most of the time, this gory detail will be hidden from you in the user interface of your browser — but if you copy the URL from the address bar and paste it into a text editor, you’ll be presented with the gory details! (The domain punycoder.com allows you to easily play with other scripts and non-English-alphabet names and see how they are punycoded.)

We needed a backend host with Python for the project, which ruled out a lot of free options — particularly since we wanted to bind to this custom domain name. As I had access to an Azure subscription already, I went that way.

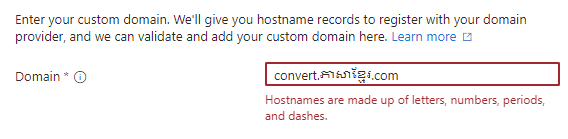

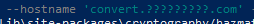

So first, I attempted to create the site quickly in the Azure Portal web front end. I had no trouble creating https://khconvert.azurewebsites.net/, which took just a few seconds. Then I had to bind our domain convert.ភាសាខ្មែរ.com to this Azure hostname. But computer said no:

The name convert.xn--j2e7beiw1lb2hqg.com is not valid.

Fine, perhaps the UI needed the domain name as a Unicode string.

Hmm. Blocked again.

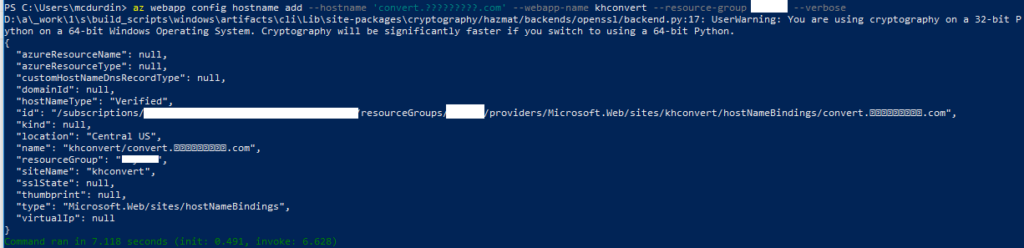

Now, often the Azure Portal does not allow you to do things which can be done via their APIs or with their az CLI tool. So I tried again with az.

PS> az webapp config hostname add --hostname convert.xn--j2e7beiw1lb2hqg.com --webapp-name khconvert --resource-group <REDACTED> --verbose

The name convert.xn--j2e7beiw1lb2hqg.com is not valid.

Command ran in 4.228 seconds (init: 0.493, invoke: 3.736)

Okay then … perhaps I have to use the non-punycode version of the hostname? After all, that error in the Azure Portal was purely in the UI; it didn’t even call into the API.

And it worked!

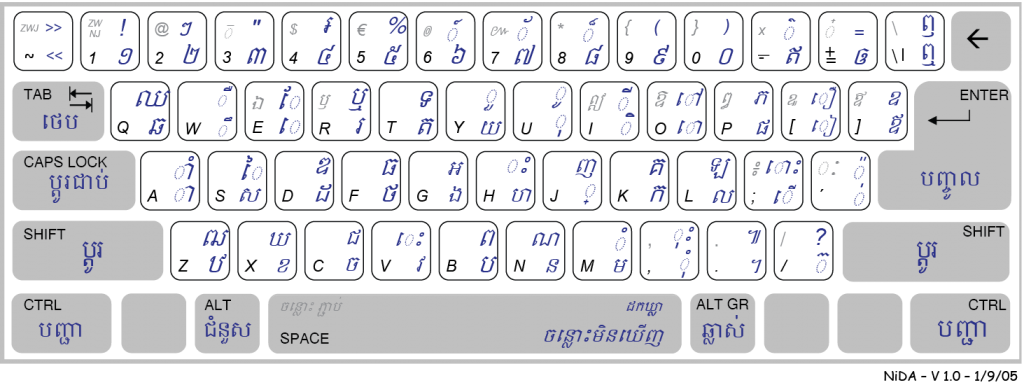

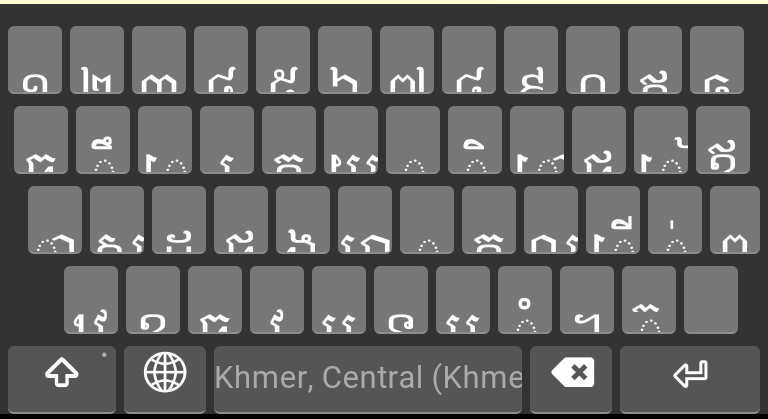

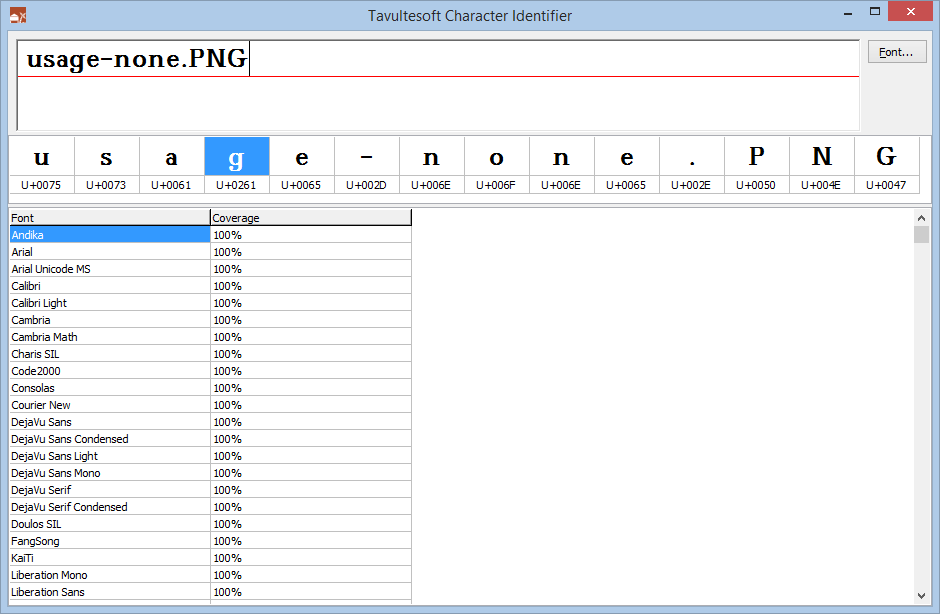

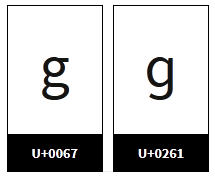

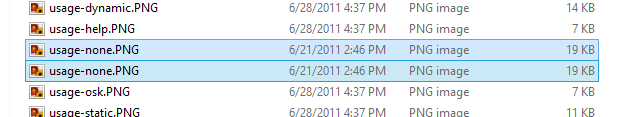

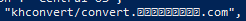

Side track: the old PowerShell console did not do well with rendering Khmer in its default settings…

So it looks like those were not question marks? Interestingly, I can paste Khmer characters into the console, but I can’t copy them back out from the command; those letters copy out as question marks. However, as you can see from a screenshot and corresponding text later, I can copy the response and it displays correctly. (Admittedly, I was using the old PowerShell console, not Terminal, which probably would have none of these problems.)

But it worked, hurrah! We are done!

Well, not quite. We need a TLS certificate for the site. Fortunately, Azure supports creating certificates automatically for web apps.

But not for IDNs.

And not even the CLI saved me this time.

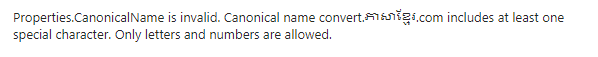

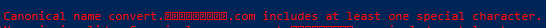

PS> az webapp config ssl create --hostname 'convert.?????????.com' --name khconvert -g <REDACTED> --verbose

This command is in preview and under development. Reference and support levels: https://aka.ms/CLI_refstatus

Operation returned an invalid status 'Bad Request'

Content: {"Code":"BadRequest","Message":"Properties.CanonicalName is invalid. Canonical name convert.ភាសាខ្មែរ.com includes at least one special character. Only letters and numbers are allowed.","Target":null,"Details":[{"Message":"Properties.CanonicalName is invalid. Canonical name convert.ភាសាខ្មែរ.com includes at least one special character. Only letters and numbers are allowed."},{"Code":"BadRequest"},{"ErrorEntity":{"ExtendedCode":"51021","MessageTemplate":"{0} is invalid. {1}","Parameters":["Properties.CanonicalName","Canonical name convert.ភាសាខ្មែរ.com includes at least one special character. Only letters and numbers are allowed."],"Code":"BadRequest","Message":"Properties.CanonicalName is invalid. Canonical name convert.ភាសាខ្មែរ.com includes at least one special character. Only letters and numbers are allowed."}}],"Innererror":null}

At this point, I threw my hands in the air, put the domain behind a Cloudflare proxy and used their SSL certificate, and it just worked.

But it is no wonder there are so few IDNs. The experience is still frightful. The errors are weird. We have a long, long way to go.

(Oh, and do take a look at ភាសាខ្មែរ.com and convert.ភាសាខ្មែរ.com: the problems they are playing with have quite a lot to do with IDNs too!)