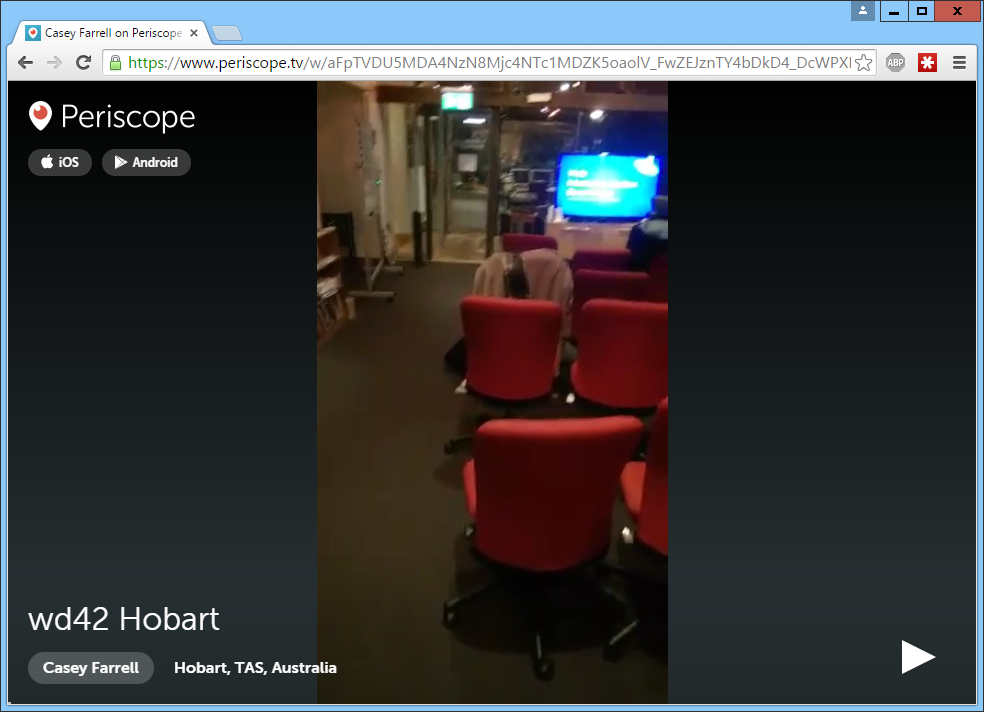

So, yesterday I gave a presentation (the title isn’t important, but it was called “Internationalization Done Wrong”) at WebDev42°, our local web developer meetup (42°S is the latitude of Tasmania). Before the presentation, I tweeted out to see if anyone was interested in doing a Periscope of the talk.

What is Periscope? It’s Twitter’s live video streaming platform, which makes it easy for anyone to live stream events, walks on the beach, or pretty much anything.

After the usual Twitteral conversation, Casey happily agreed to take on the job of cameraman.

https://twitter.com/kcidau/status/613510102415376384

And we were set to go. I told my friends on Twitter that I would be presenting at 6pm and would be experimenting with Periscope. A couple of them decided to try and watch.

Here’s what we learned.

The basic experience is really smooth

The premise of Periscope is that anyone can host a live stream. That’s definitely true: setup was trivial and getting started was a matter of pressing the Broadcast button. Following the stream on other devices also worked pretty well, at least on mobile devices; not so much on desktops…

People will watch immediately

All sorts of strange people started watching, making helpful comments like “that guy has no hair” and “how come so many of you have beards?” and weird questions about Tasmania.

That strangers came to watch frankly surprised me, because we were a bunch of computer geeks talking about computer geekery, and that’s not really that interesting.

Keep your periscopes focused

I tweeted a link to the Periscope and a couple of my friends jumped on to watch almost immediately. This was unfortunate, because they ended up being put through 20 minutes of talk about beer and hair before the presentation started.

So start a periscope for general chat before the main event if you like, but start a new one for the presentation.

Turn off notifications and all the jazz on your phone

Notifications are seriously loud for the poor viewers of the video!

Talk loudly

I apparently talked too quietly for some of the periscopees (aka viewers).

Take your finger off the microphone

Yep. That’s an effective way to mute the stream. Speaking from experience here. (Suggested by @johndalton).

Name your periscopes

It wasn’t clear to people jumping into the stream what the event was all about. The name should probably have been “Web Dev 42 South Meetup – General Chat” for the first three periscopes (more about that in a moment). Then a new periscope should have been started for each presentation given.

As it turned out, a new periscope was started for each presentation, kinda, but not on purpose (learn why soon). And I only ever shared the link to the first periscope, so my friends were left trying to find the new streams (some of which ended up coming from different users as well).

And locate your periscopes

Updated 10:50pm: As Masni wrote on Twitter, turn on location so people can find your streams.

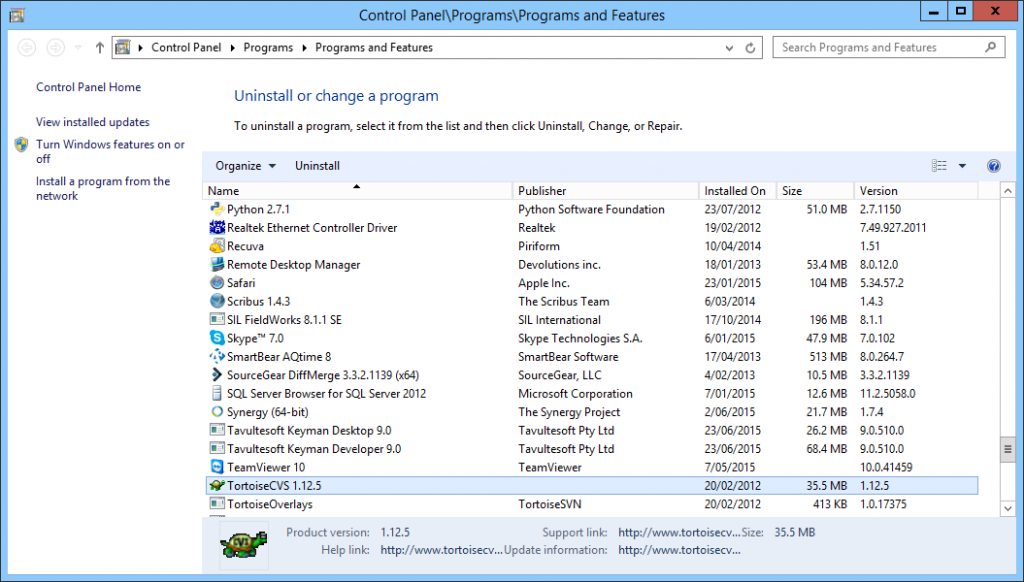

The Android client is kinda buggy

Yeah, that’s why we had three periscopes even before the event officially started. It’s because the Android client kept crashing. We finally switched to an iPad, but not before losing the last few minutes of my talk. (Not a big loss, to be sure!)

The iPad was much more stable.

The cameraperson probably needs to be an extrovert

So Casey is really not an introvert, which is good, because I would be too shy to run around filming people. He did a good job of that, even through all the chaos.

Share your periscopes

I got kinda busy after tweeting out the first link, so I didn’t really realise that the app had crashed and that everyone was having to find a new stream to follow. It definitely made things more complicated. If you are going to be doing a presentation, try and get someone else to look after tweeting out live things like that on your behalf. They probably won’t steal your phone or tweet anything too embarrassing.

Quality is variable

Because it’s a live stream, little network hiccups do sometimes happen. Audio disappeared for something like 30 seconds on one stream (no idea why). The video quality is not awesome but it’s certainly watchable. It’s like YouTube 2003 (was YouTube around in 2003?)

So all those little things aside, the general experience was still pretty cool. Definitely an easy way to share a video!

After the event

After complaints from my friends and rude comments from the audience, I realised I wanted to review my presentation efforts (i.e. the video) online. This ability to watch a saved stream later was one of the key reasons I chose to try Periscope over Meerkat (a very slightly older competitor).

How did that go?

The post-event experience is pretty minimal

Even though streams are saved so you can watch them asynchronously, the experience is pretty minimal. There is precisely one control: the play/pause button. That’s right, no fast-forward, no rewind, no skip, no ability to move to a specific point in the stream.

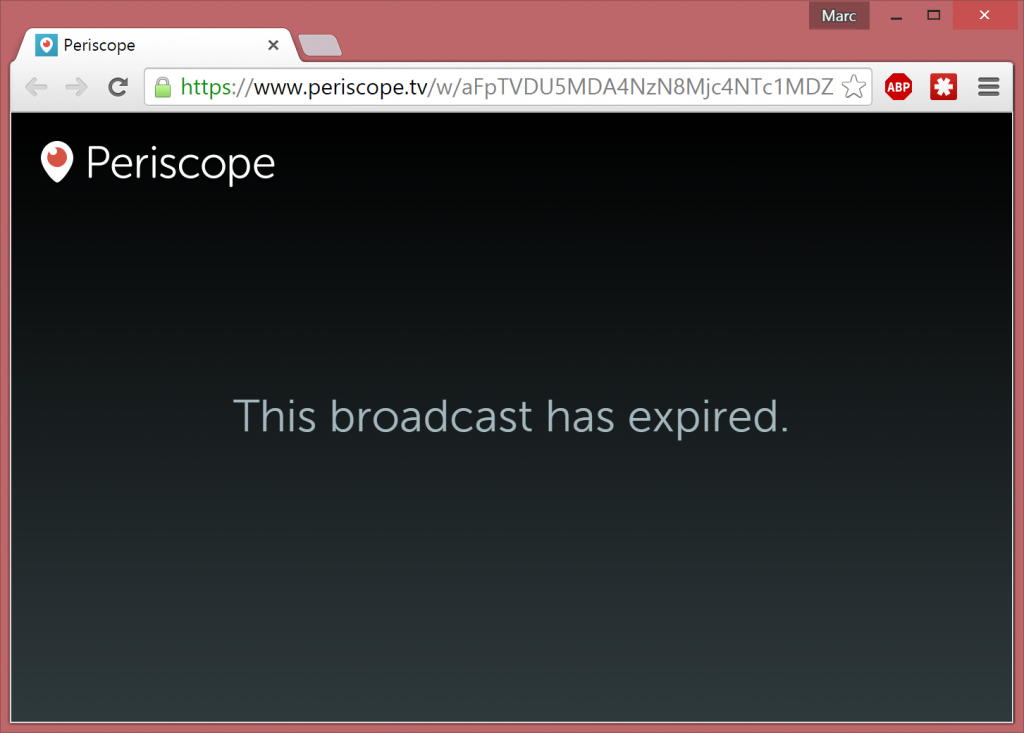

I was faced with watching 20 minutes of discussion about beer and hair just so I could review my presentation!

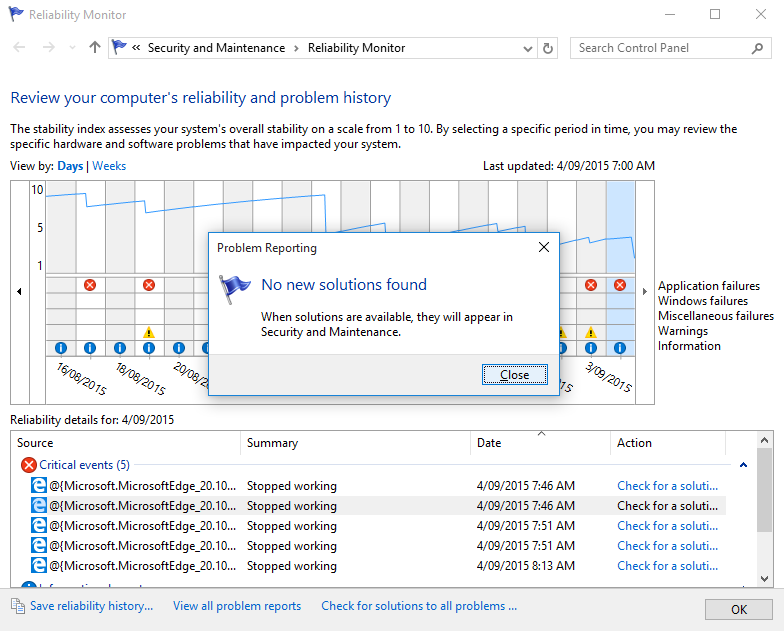

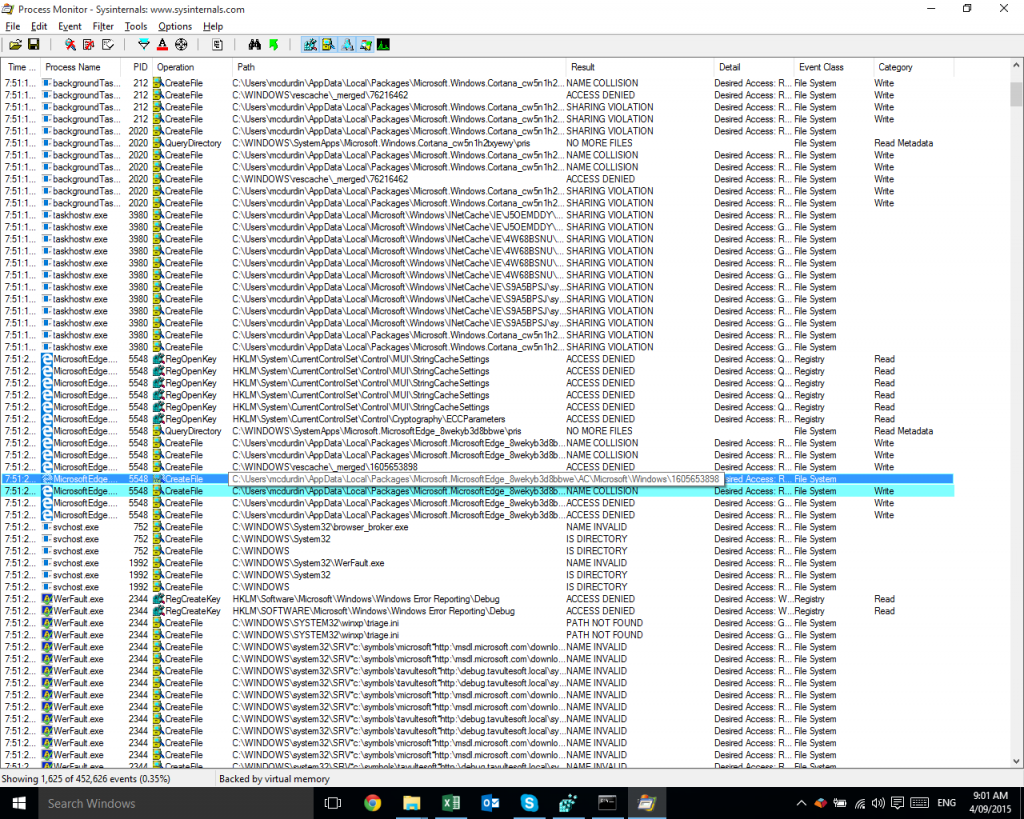

The website is a bit buggy

I frequently had trouble starting streams, and more than once a stream fell over after a few minutes, and the only way to resolve this was to start again with a refresh (ouch!)

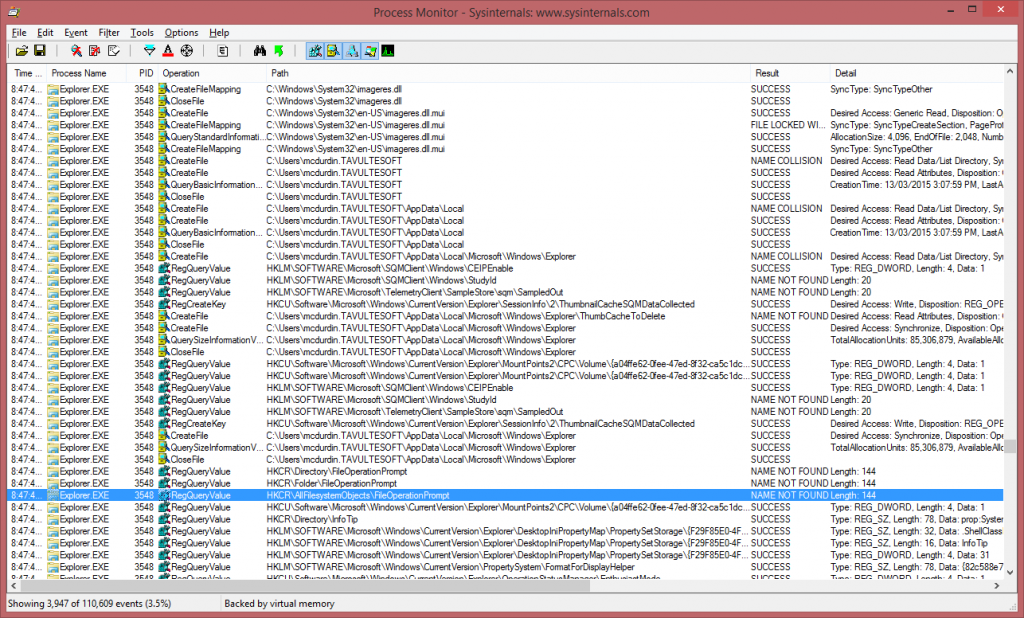

Looking at the situation with the Web Developer console, it appears that stream data requests were sometimes being denied with 403 errors. I didn’t dig into why, but that’s a bit of a deal-breaker.

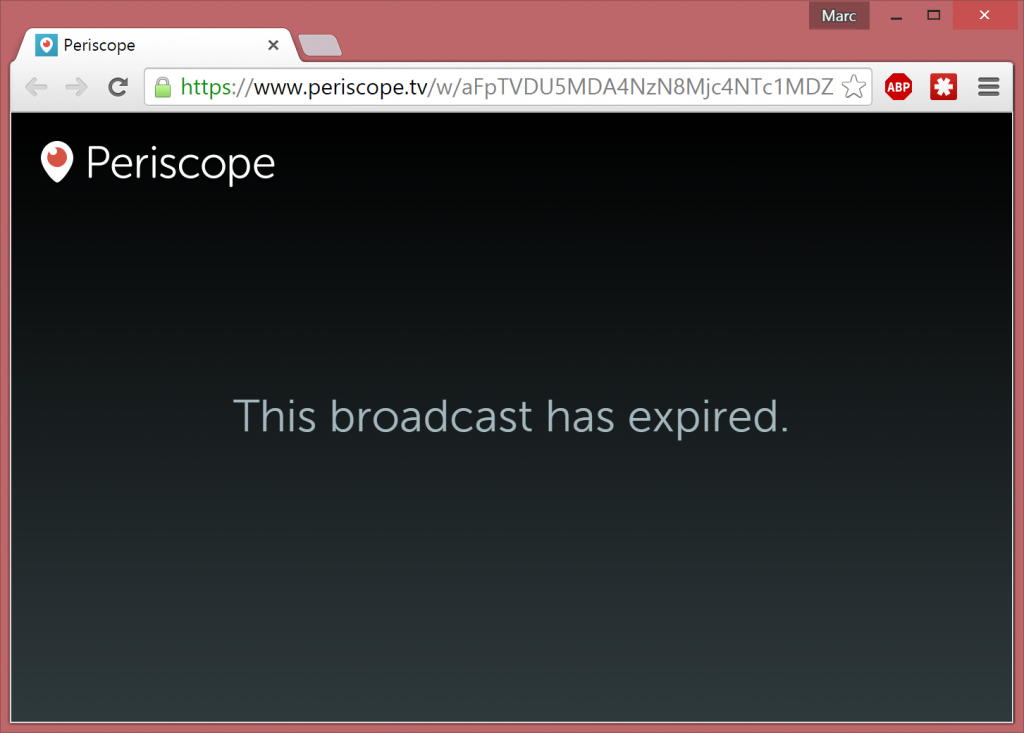

You can only watch for 24 hours

After 24 hours, the public link is gone.

Now, you can save the stream onto your device, if you (a) remember to do that, and (b) have enough storage left. You can choose to have all streams saved by default, which does mean that point (b) would quickly become a truism!

Saving a Periscope Stream from the website

I still hadn’t managed to get further than 4 minutes into my presentation without the site throwing an error. Each refresh meant sitting through three minutes of pre-talk setup yet again (no fast forward, remember). This was something like the 4th periscope, started after the Android crashes, so at least I could skip the first 17 minutes. In the end I gave up on watching online and decided to find a way to save the stream instead.

And I found my way through it! 🙂 And if I can do it, I’m sure you can.

Caveats apply here. You may not have permission to copy a stream because it is copyright and all that. Be good. They may change the website back-end and you’ll have to adapt with the changes. Remember this will only work in the first 24 hours after the event.

So here’s how you do it. I haven’t automated this (much) because that’s for someone else to do, later.

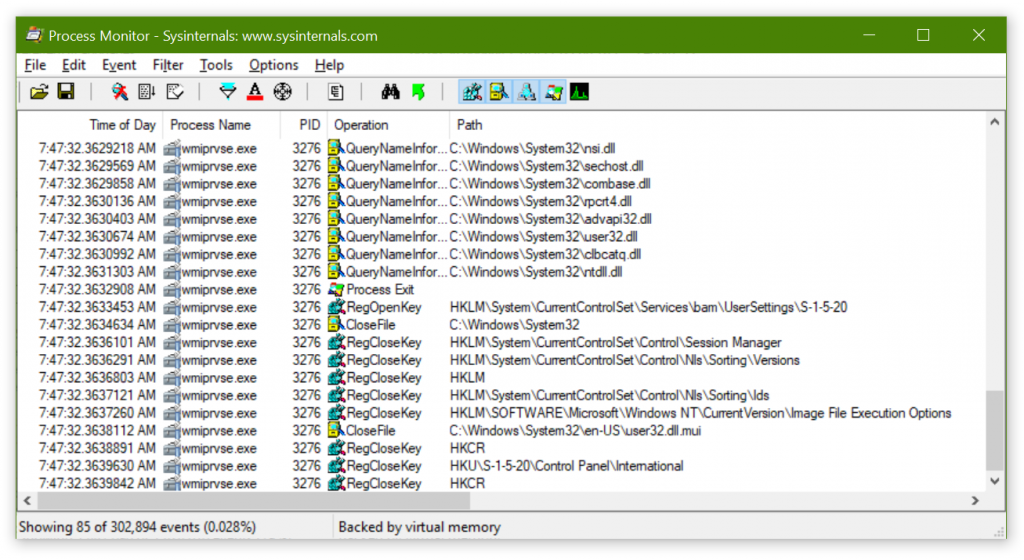

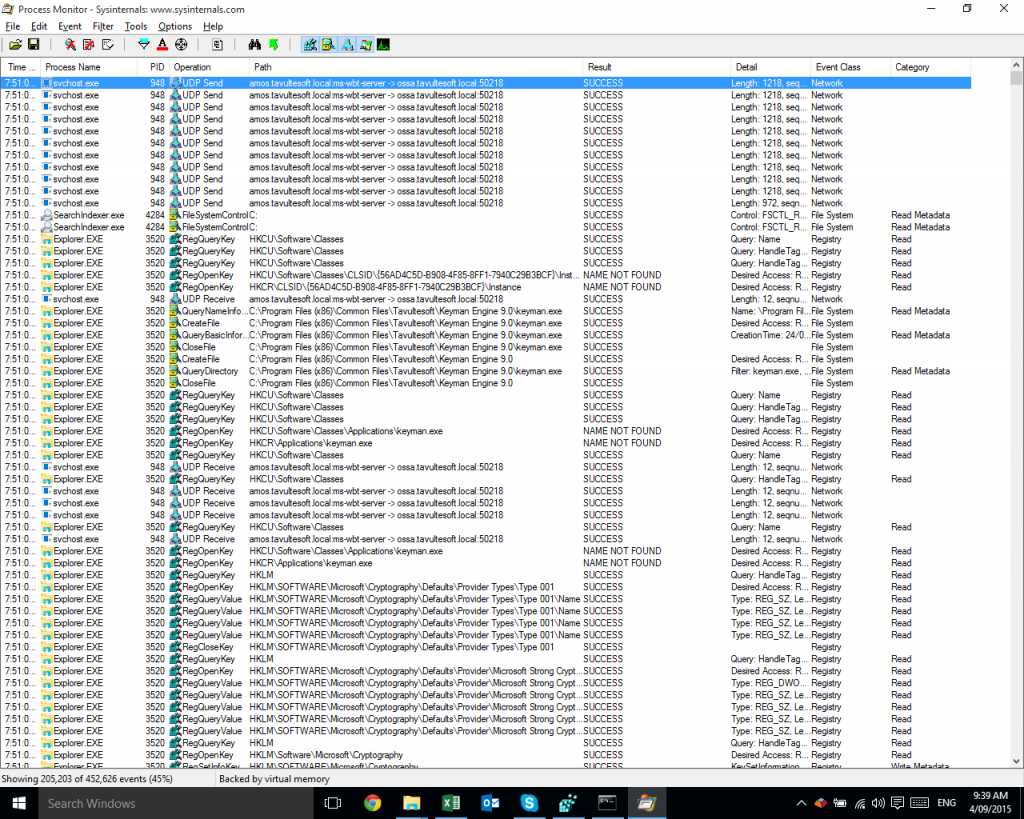

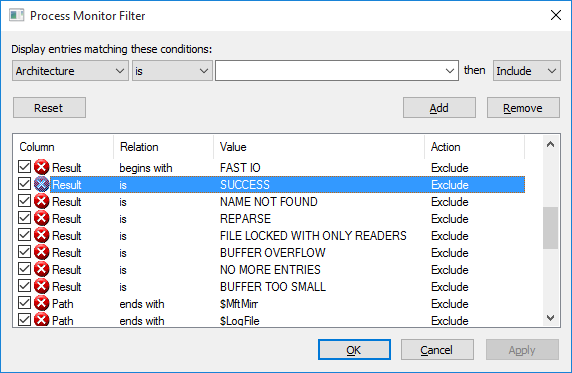

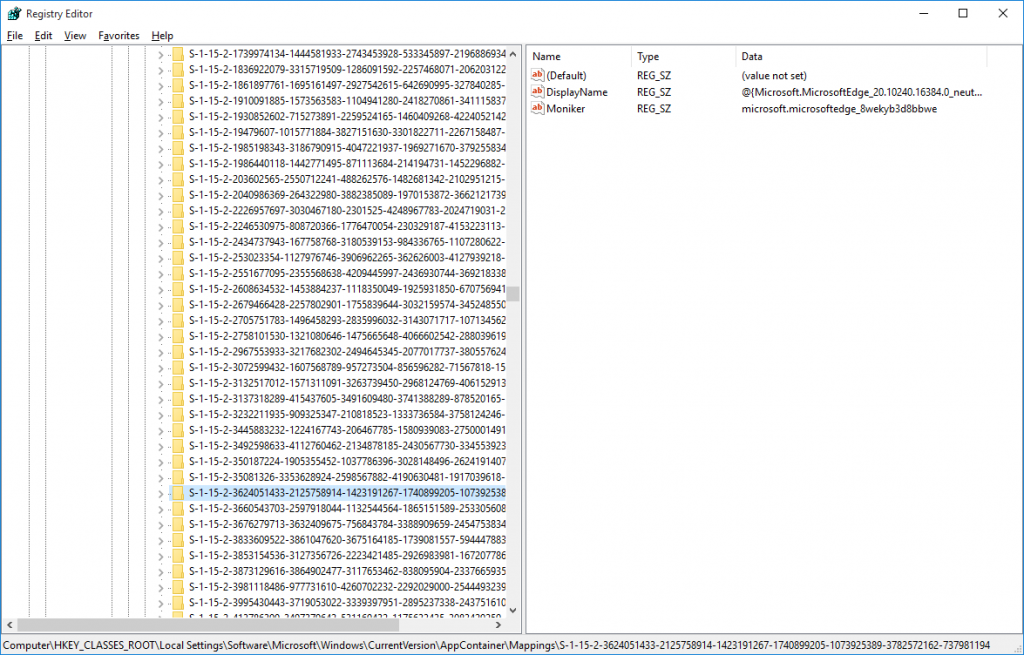

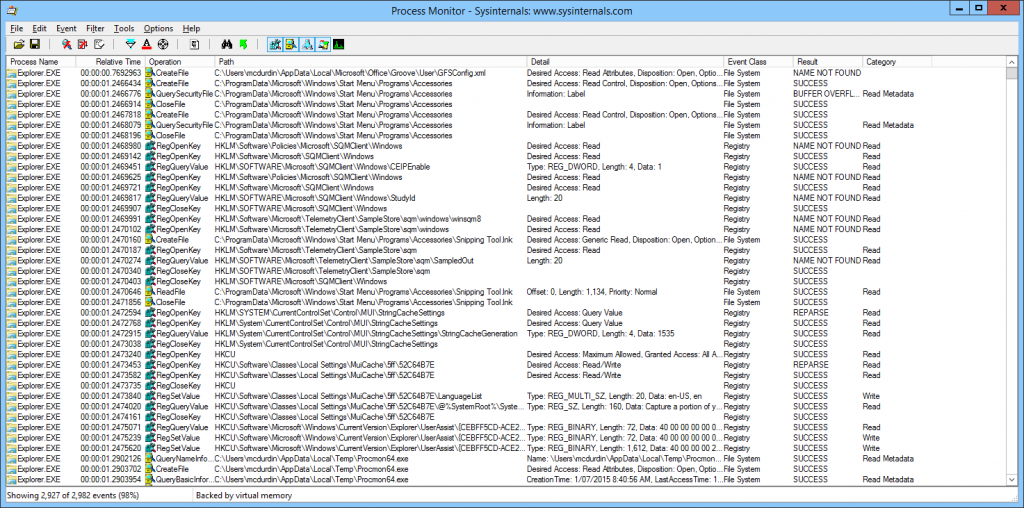

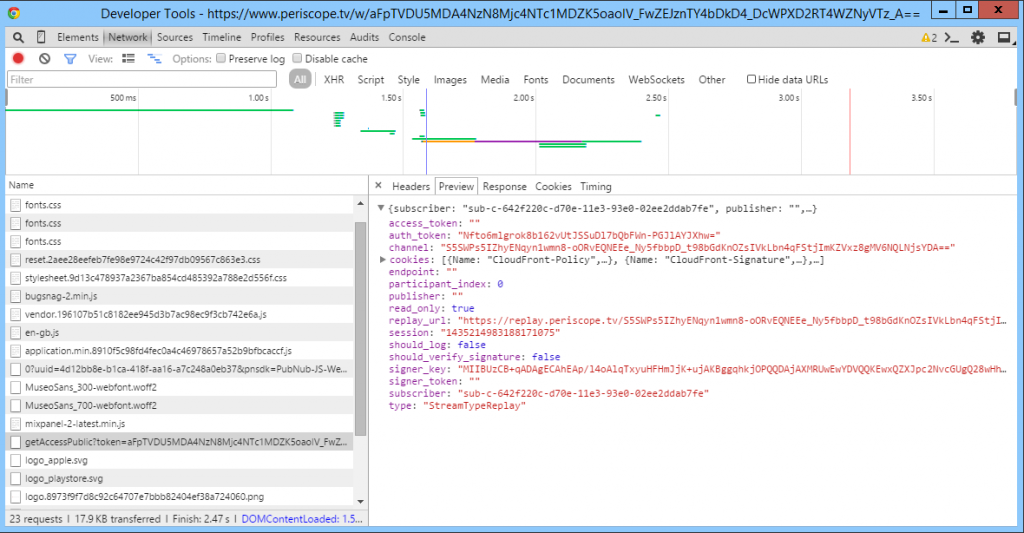

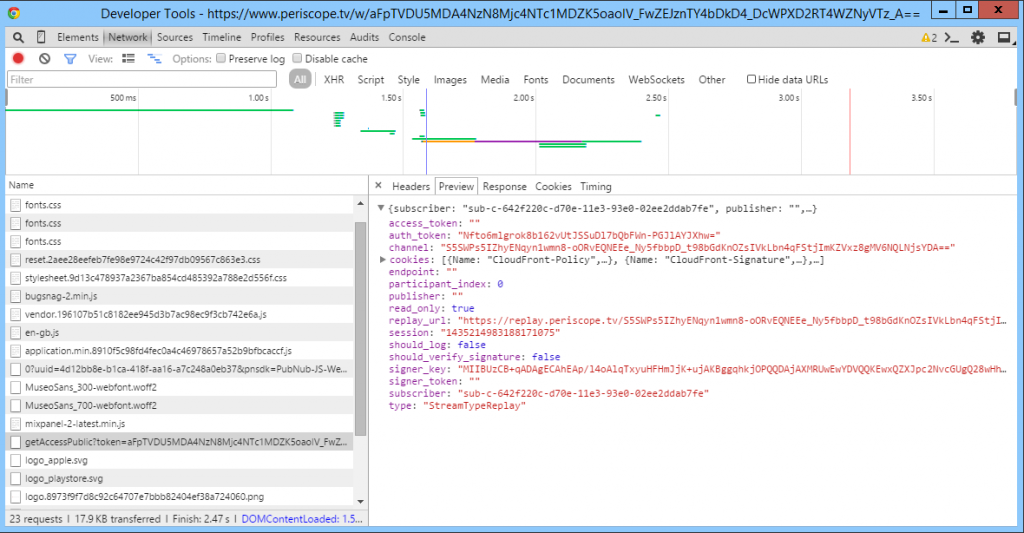

Visit the Periscope URL with Web Developer open.

I used Chrome but you can use pretty much any browser. We want to capture the network traffic to find the replay URL and the cookies that grant access to the stream, so we can download the stream with a command-line tool.

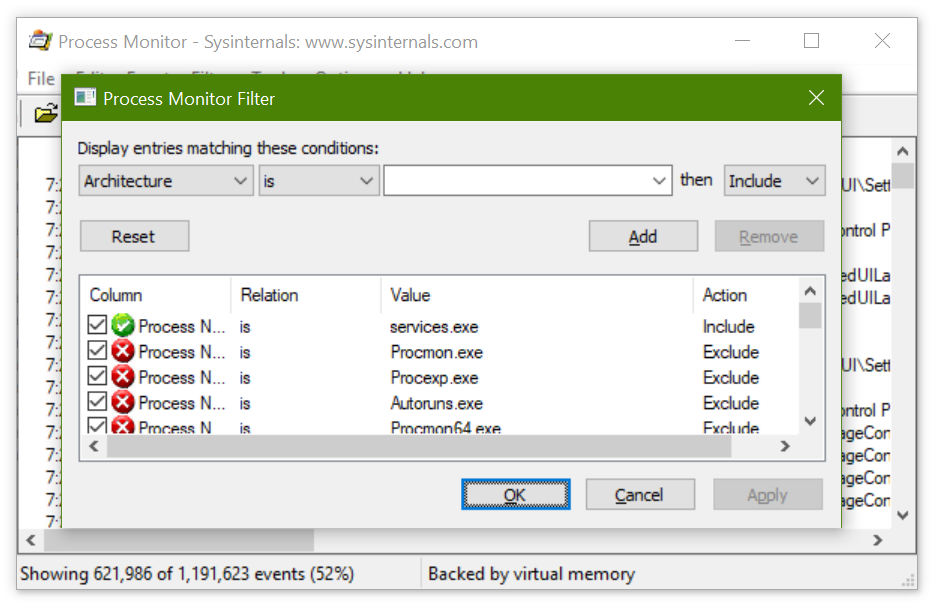

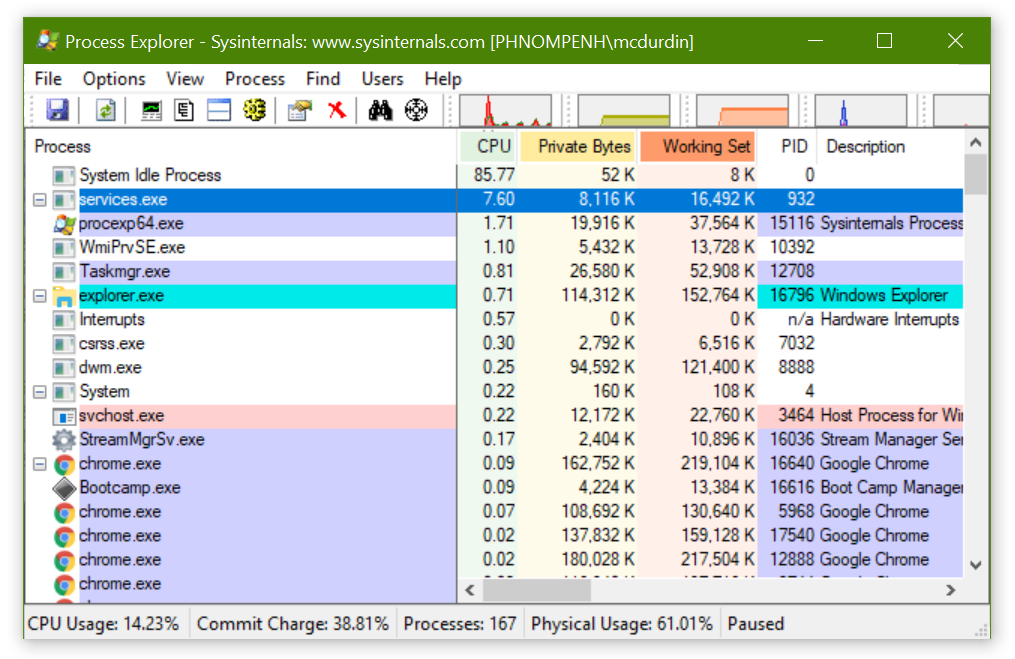

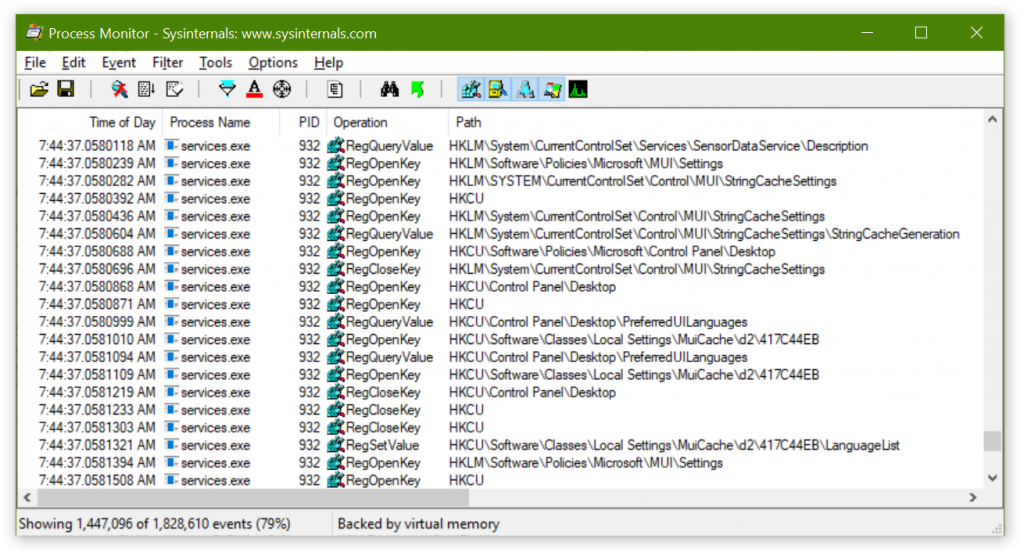

Once the page loads, look for the getAccessPublic request, as shown below.

We’re going to want two different things out of that.

- The replay_url, shown most easily in the Preview pane. Copy it to the clipboard and paste it into a document for later.

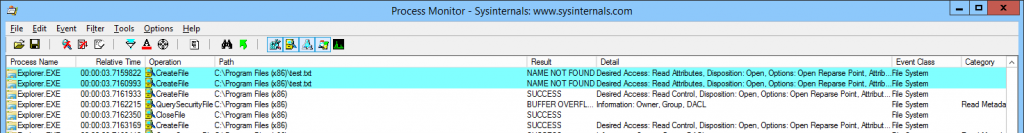

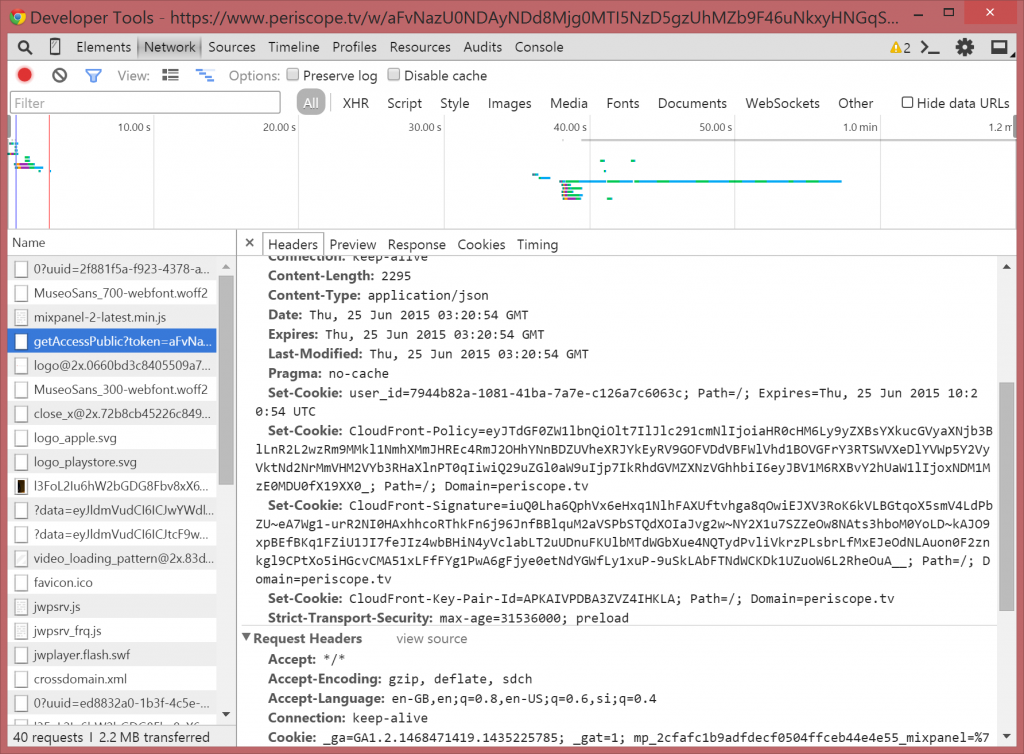

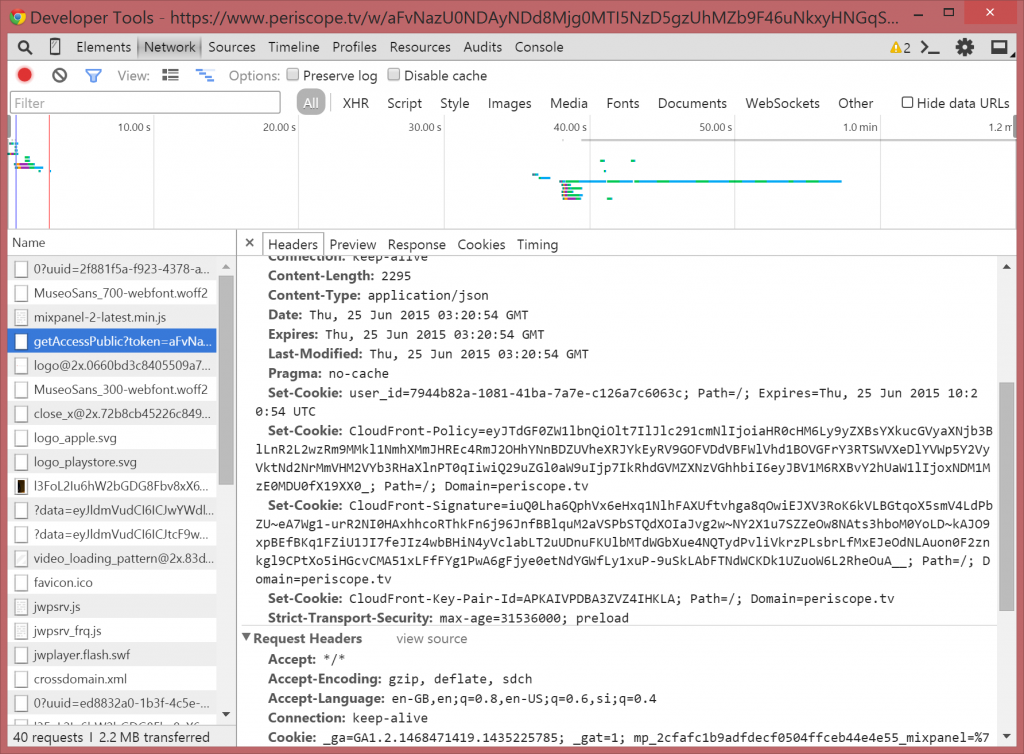

- And the cookies. These are easier to copy from the Headers pane. (Sorry, this is a later screenshot, but the principle still applies.)

You’ll need to copy the value of each Set-Cookie header, up until the first semicolon (;). Don’t include the “Set-Cookie” text itself. Paste these into a text document, separating the strings with semicolons. Don’t add line breaks.
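If copying and trimming the cookies by hand gets tedious, the same steps can be sketched in a few lines of Python. This is only an illustration, not part of what I ran: the function names are made up, the example header values are invented, and it assumes the getAccessPublic response body is a JSON object with a top-level `replay_url` field (which is how it looks in the Preview pane).

```python
import json


def cookie_header(set_cookie_values):
    """Build one Cookie header line from raw Set-Cookie header values."""
    pairs = []
    for value in set_cookie_values:
        # Keep only the name=value part before the first semicolon;
        # attributes such as Path or Expires are dropped.
        pair = value.split(";", 1)[0].strip()
        if pair:
            pairs.append(pair)
    # Separate the pairs with semicolons, all on one line.
    return "Cookie: " + "; ".join(pairs)


def replay_url_from_response(body):
    """Pull replay_url out of the response body.

    Assumption: the body is a JSON object with a top-level "replay_url" field.
    """
    return json.loads(body)["replay_url"]


if __name__ == "__main__":
    # Invented header values, purely for illustration.
    example = [
        "token_a=abc123; Path=/; Secure",
        "token_b=def456; Expires=Wed, 01 Jul 2015 00:00:00 GMT",
    ]
    print(cookie_header(example))
```

The result of `cookie_header` is a single line with no line breaks, which is the shape the download step expects.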

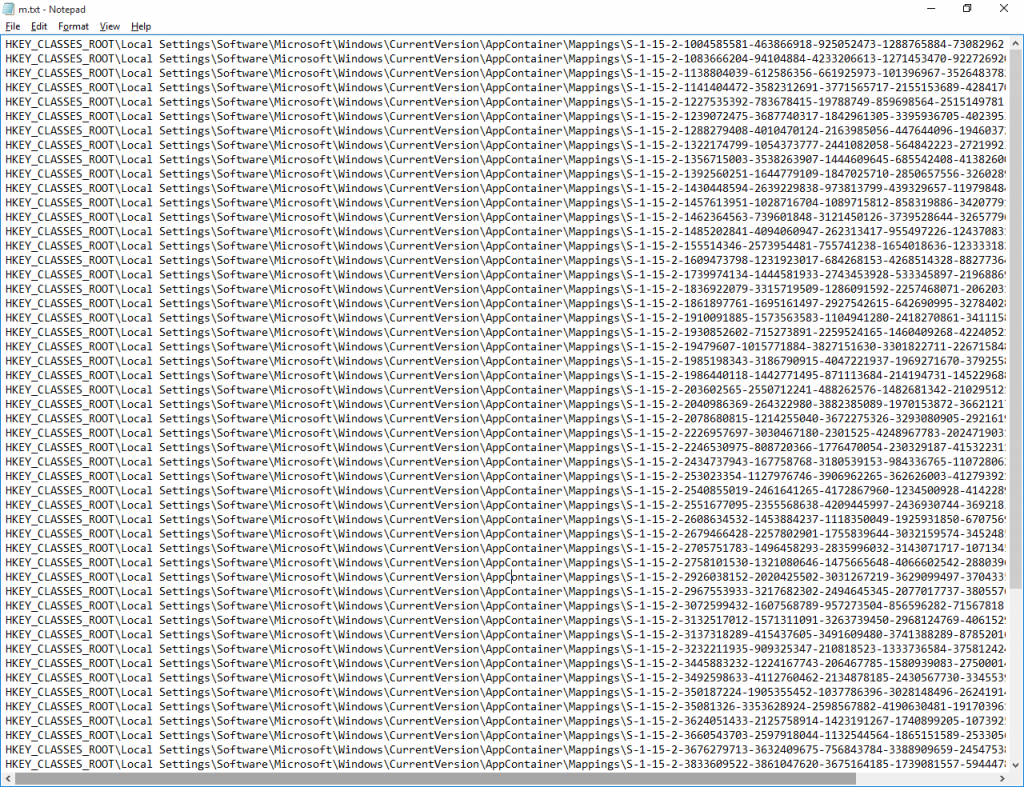

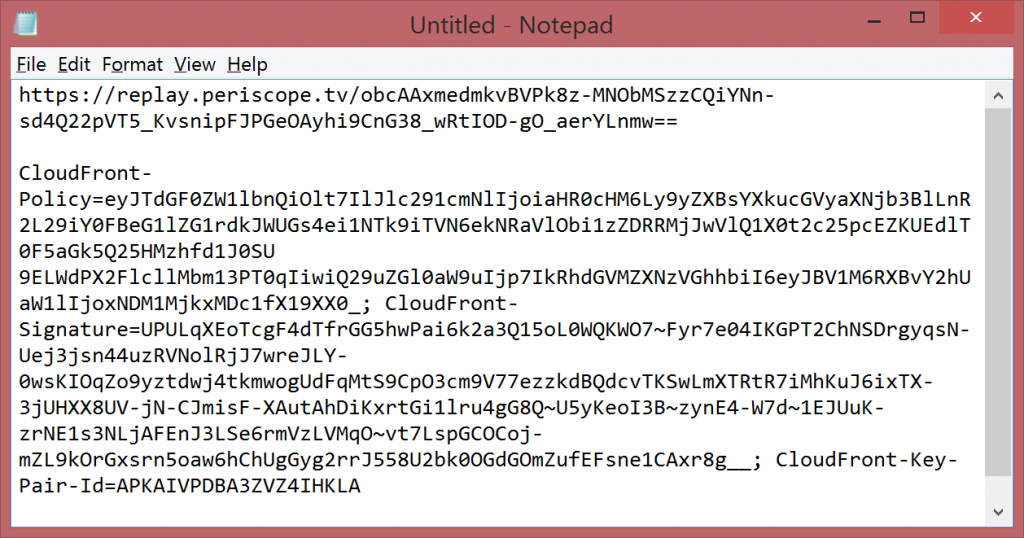

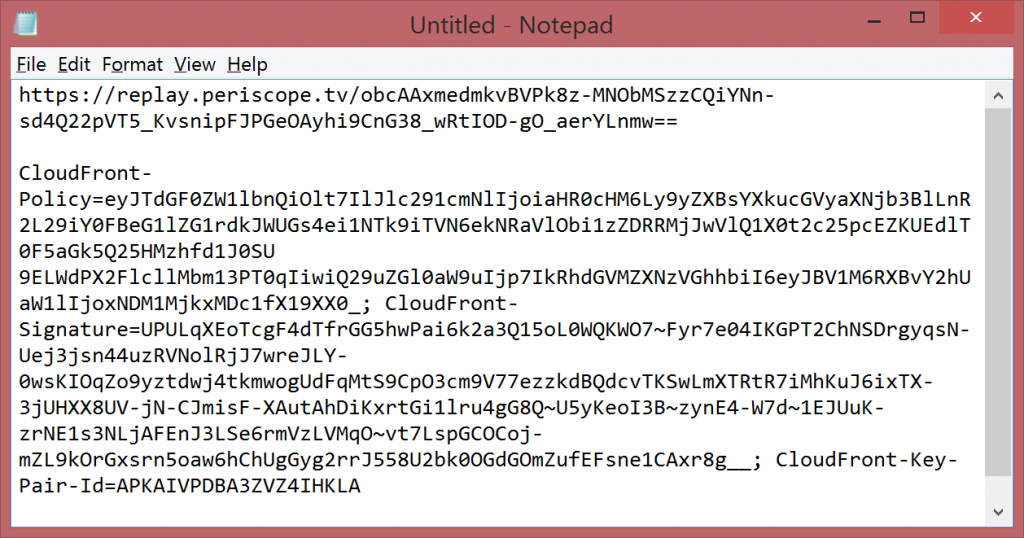

When you are done, you should have something like this (no line breaks, just automatic wordwrapping showing here):

The replay URL will point to a path on a web server that contains an m3u8-format playlist file, and a set of MPEG-2 TS video files, which represent your video stream broken down into chunks.

Create a batch file to download the video

Now, we want to pass those variables you collected into a batch file, for simplicity. I used the tool wget to download the files from the command line.

Here’s the batch file code.

@echo off

set cookie="Cookie: <your-cookie-text-here>"

set url=<your-replay-url-here>

wget --no-cookies --header %cookie% --no-check-certificate %url%/playlist.m3u8

findstr "chunk" playlist.m3u8 > downloadlist.txt

for /f %%i in (downloadlist.txt) do wget --no-cookies --header %cookie% --no-check-certificate %url%/%%i

Replace the <your-cookie-text-here> and <your-replay-url-here> placeholders with your variables collected earlier. This script will download the playlist.m3u8 file, using the correct access permissions, and parse out the chunks of your video from that (pretty simple) file format into a download list. Then, it goes through the download list and downloads each chunk. Pretty straightforward.

(Why --no-check-certificate? Because the default root certificate list that comes with wget is out of date!)
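For anyone not on Windows, here is a rough Python sketch of the same idea as the batch file: fetch the playlist, pick out the chunk lines, then fetch each chunk with the captured cookies. The names `chunk_names`, `fetch` and `download_all` are hypothetical, and it assumes the playlist lists plain chunk file names (containing the text "chunk") relative to the replay URL, as the batch file does. It is slightly stricter than `findstr`, because it also skips the `#` tag lines of the m3u8 format. Unlike the wget call above, `urllib` checks certificates by default.

```python
import urllib.request


def chunk_names(playlist_text, marker="chunk"):
    """Return the playlist lines that name video chunks."""
    names = []
    for line in playlist_text.splitlines():
        line = line.strip()
        # Skip blank lines and m3u8 tag/comment lines, which start with '#'.
        if not line or line.startswith("#"):
            continue
        if marker in line:
            names.append(line)
    return names


def fetch(url, cookie):
    """Download one URL, sending the cookies ("name=value; name=value")."""
    request = urllib.request.Request(url, headers={"Cookie": cookie})
    with urllib.request.urlopen(request) as response:
        return response.read()


def download_all(base_url, cookie):
    """Save playlist.m3u8 and every chunk it lists into the current folder."""
    base = base_url.rstrip("/")
    playlist = fetch(base + "/playlist.m3u8", cookie)
    with open("playlist.m3u8", "wb") as handle:
        handle.write(playlist)
    for name in chunk_names(playlist.decode("utf-8")):
        with open(name, "wb") as handle:
            handle.write(fetch(base + "/" + name, cookie))


if __name__ == "__main__":
    # Invented playlist content, just to show the parsing step (no network).
    sample = "#EXTM3U\n#EXTINF:3.6,\nchunk_0.ts\n#EXTINF:3.6,\nchunk_1.ts\n#EXT-X-ENDLIST\n"
    print(chunk_names(sample))
```

Note that `fetch` takes the cookie text without the leading "Cookie: " label, because `urllib` adds the header name itself.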

Save and run the batch file.

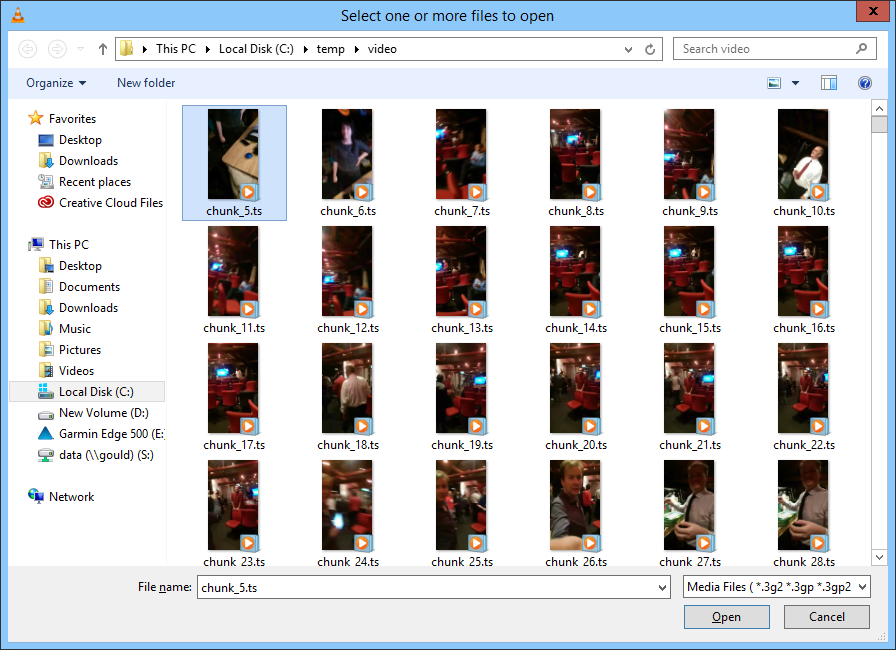

It may take a little while to run, but after a bit, you’ll have collected all the different bits of your video for posterity onto your machine. The whole download took about 15 minutes on my 100 Mbit/s NBN link, which suggests that Periscope may be limiting the bandwidth of each user. No biggie, I went to lunch anyway.

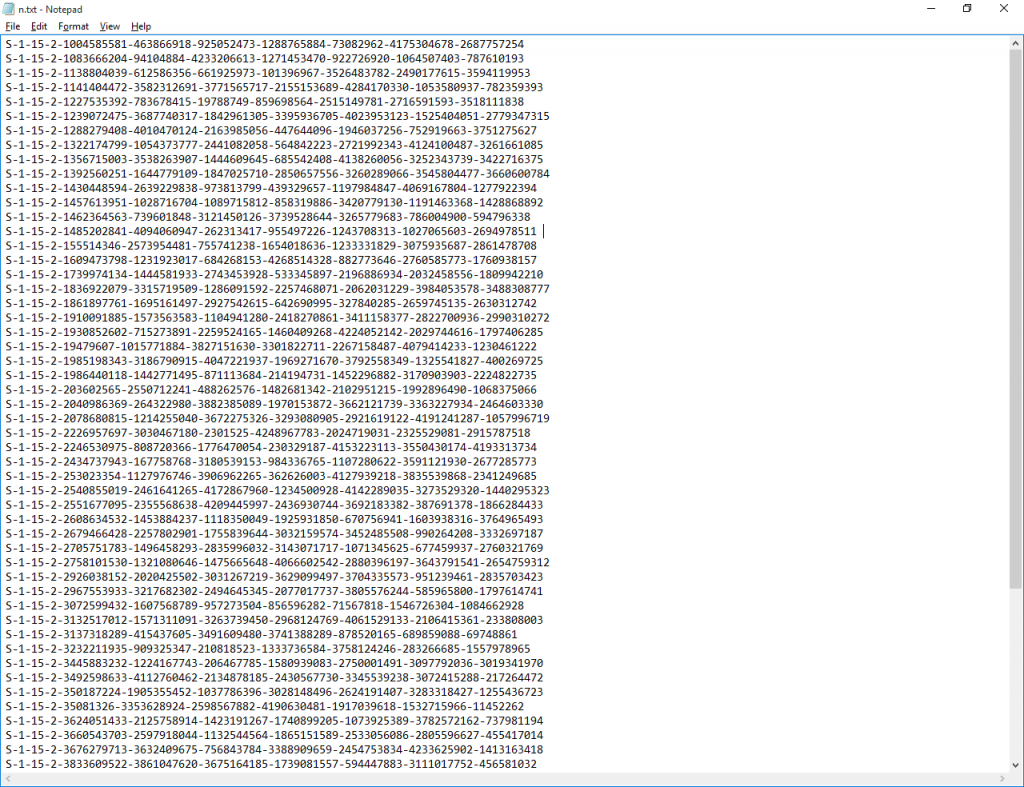

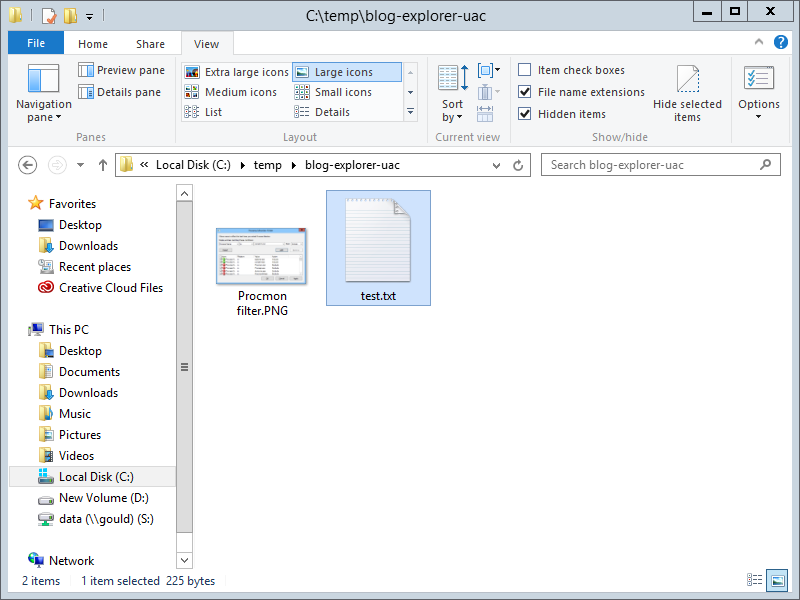

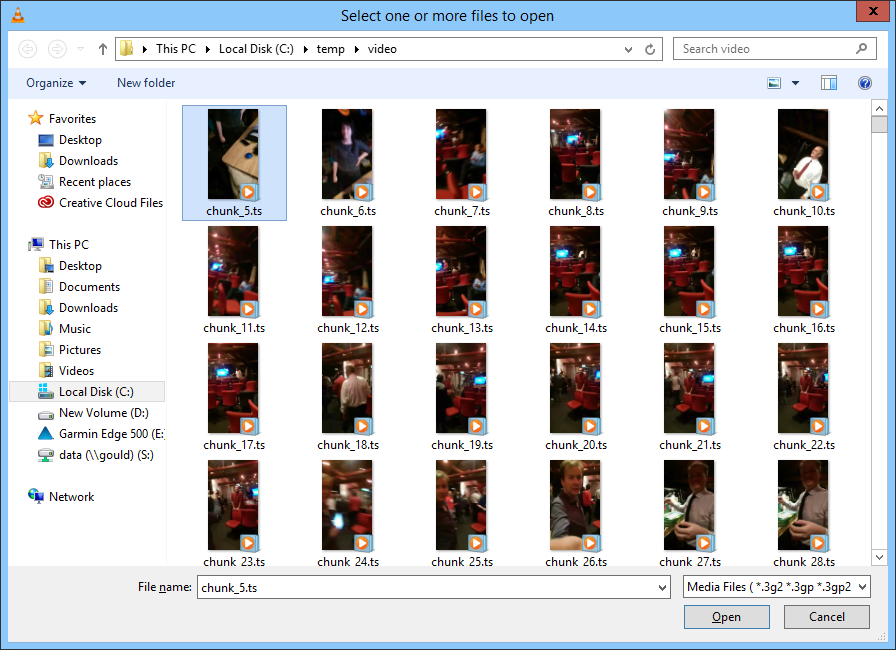

By the time you get back from lunch, the video will be on your computer in hundreds of chunks, each between roughly 100KB and 300KB.

This is no fun. Some video players will load the playlist file and work through the chunks (e.g. VLC), but most of them stutter between the different chunks, which is pretty unwatchable. And it’s a pain to manage.

Combine the chunks into an mp4 video

So I wanted to combine those chunks into a single file for a smooth video experience. I used ffmpeg, which is one of the most powerful tools around for converting weird and wonderful video file formats.

To convert these files, this is the command I ended up using (YMMV with that audio conversion parameter, which I didn’t really dig into):

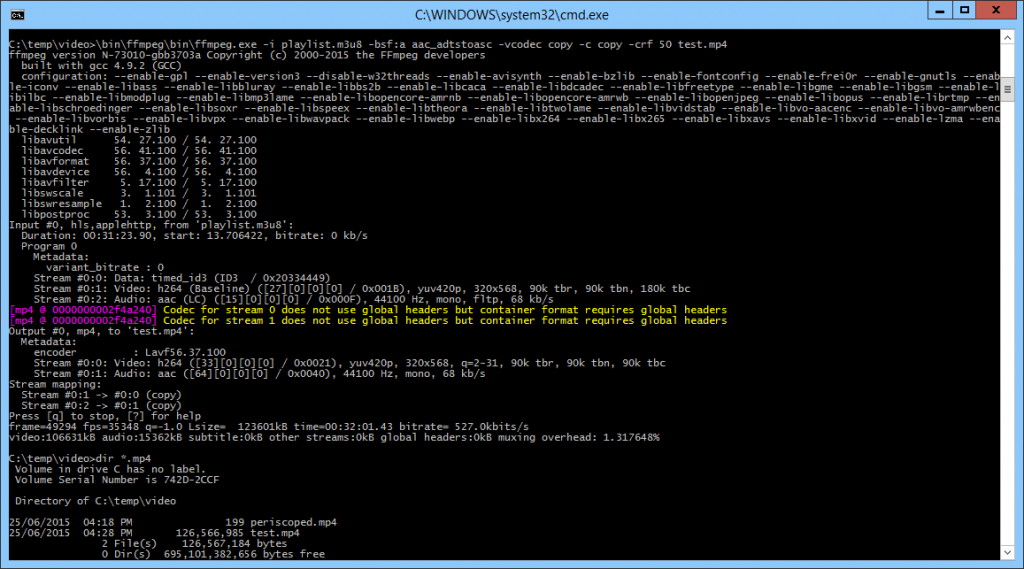

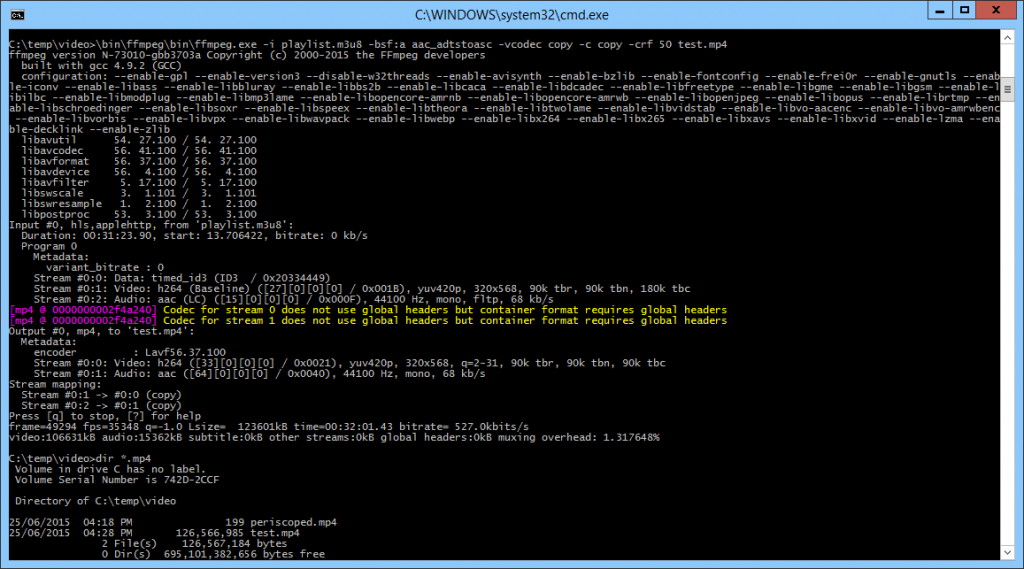

ffmpeg.exe -i playlist.m3u8 -bsf:a aac_adtstoasc -vcodec copy -c copy -crf 50 test.mp4

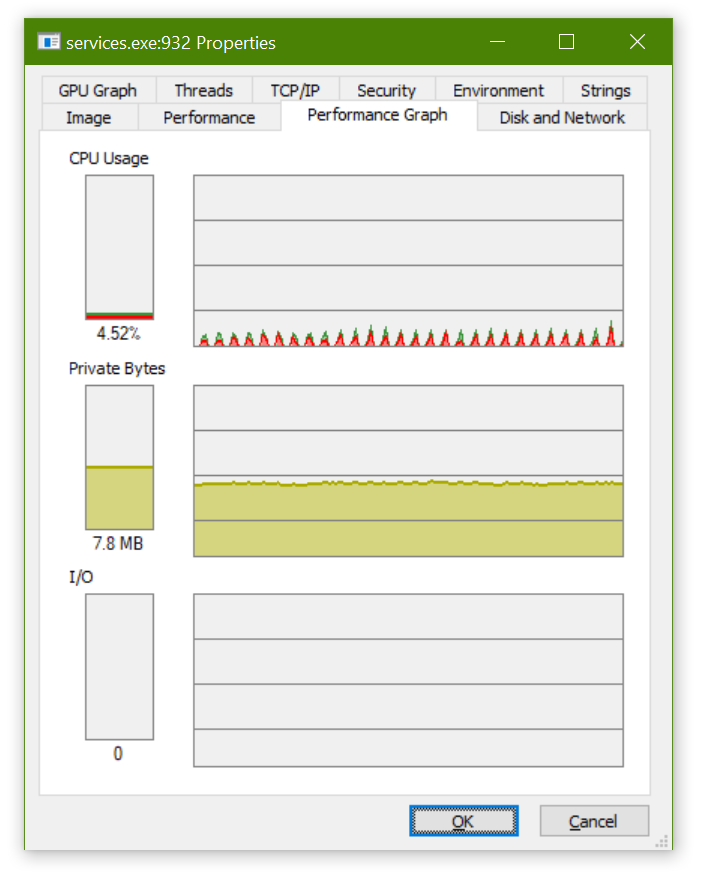

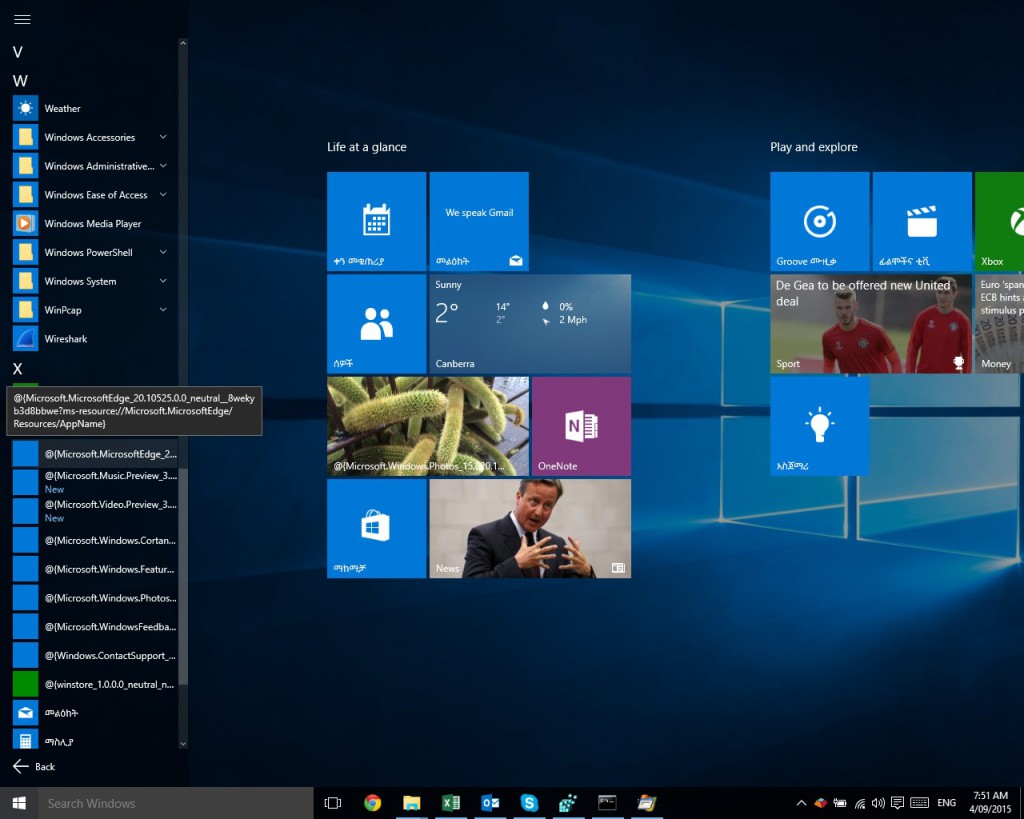

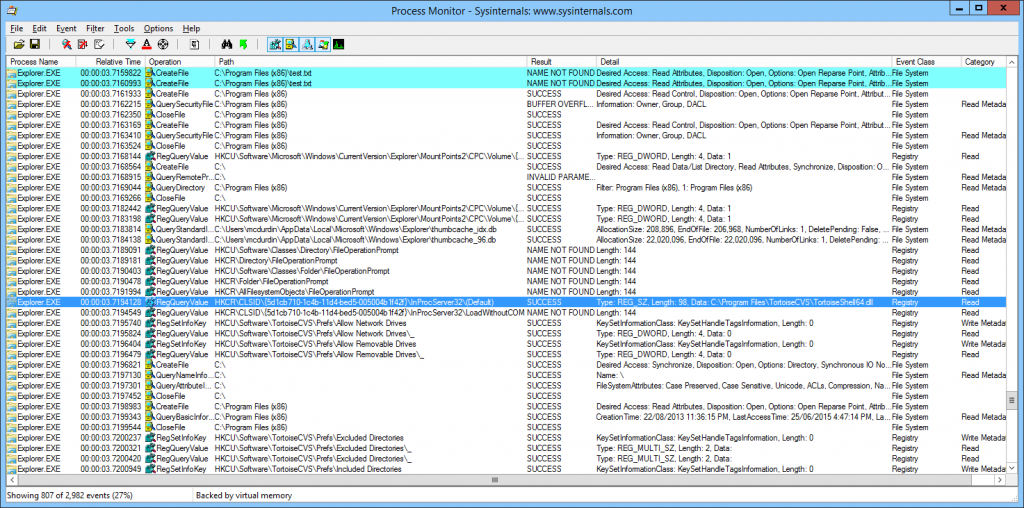

And here’s what I saw:

The good news is that test.mp4 was generated in 2 seconds flat, and plays without stutters!
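The speed makes sense: with the streams copied rather than re-encoded, ffmpeg only has to repackage the data. As far as I can tell, that also means the `-crf` quality setting has no effect here (it only applies when re-encoding), and `-c copy` already covers what `-vcodec copy` does. A trimmed-down version of the command, wrapped in a small Python helper, might look like this. The helper names are made up and it assumes `ffmpeg` is on your PATH.

```python
import subprocess


def concat_command(playlist="playlist.m3u8", output="test.mp4", ffmpeg="ffmpeg"):
    """Build the ffmpeg argument list that joins the chunks into one MP4."""
    return [
        ffmpeg,
        "-i", playlist,             # ffmpeg reads the chunk list from the playlist
        "-c", "copy",               # copy audio and video as-is, no re-encoding
        "-bsf:a", "aac_adtstoasc",  # repackage ADTS AAC audio for the MP4 container
        output,
    ]


def run(command):
    """Run the command and raise an error if ffmpeg reports a failure."""
    subprocess.run(command, check=True)


if __name__ == "__main__":
    # Print the command instead of running it, so this is safe to try anywhere.
    print(" ".join(concat_command()))
```

Passing the arguments as a list avoids any quoting trouble if your file names contain spaces.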

Fix the audio sync

But I found that the audio was almost exactly 2 seconds ahead of the video, which made things seem pretty weird. ffmpeg to the rescue again!

ffmpeg.exe -i test.mp4 -itsoffset 2 -i test.mp4 -map 0:0 -map 1:1 -acodec copy -vcodec copy test2.mp4

This command breaks apart the audio and video streams, then takes the audio stream and pauses it for two seconds, before recombining it with the video stream. This blog post explains the details of this trick.
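The ordering of the arguments matters in that command, so here is the same thing spelled out as a Python sketch with a comment per argument. The helper name is invented, and it assumes (as the original command does) that stream 0 of the file is the video and stream 1 is the audio.

```python
def delay_audio_command(source, output, delay_seconds, ffmpeg="ffmpeg"):
    """Build an ffmpeg argument list that delays the audio against the video."""
    return [
        ffmpeg,
        "-i", source,                      # input 0: read normally, used for video
        "-itsoffset", str(delay_seconds),  # shifts timestamps of the NEXT input only
        "-i", source,                      # input 1: same file, timestamps shifted
        "-map", "0:0",                     # stream 0 of input 0 (assumed to be video)
        "-map", "1:1",                     # stream 1 of input 1 (assumed to be audio)
        "-acodec", "copy",                 # no re-encoding of the audio
        "-vcodec", "copy",                 # no re-encoding of the video
        output,
    ]


if __name__ == "__main__":
    print(" ".join(delay_audio_command("test.mp4", "test2.mp4", 2)))
```

If the streams in your file are in a different order, ffmpeg’s stream specifiers `-map 0:v -map 1:a` should pick video and audio by type instead of by position.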

Trim the video

Finally, I wanted to save my viewers the agony of watching the camera setup and preparation for the presentation. It was amusing at the event but not so much when sitting at a computer waiting for the real thing to start! So again, ffmpeg made this easy:

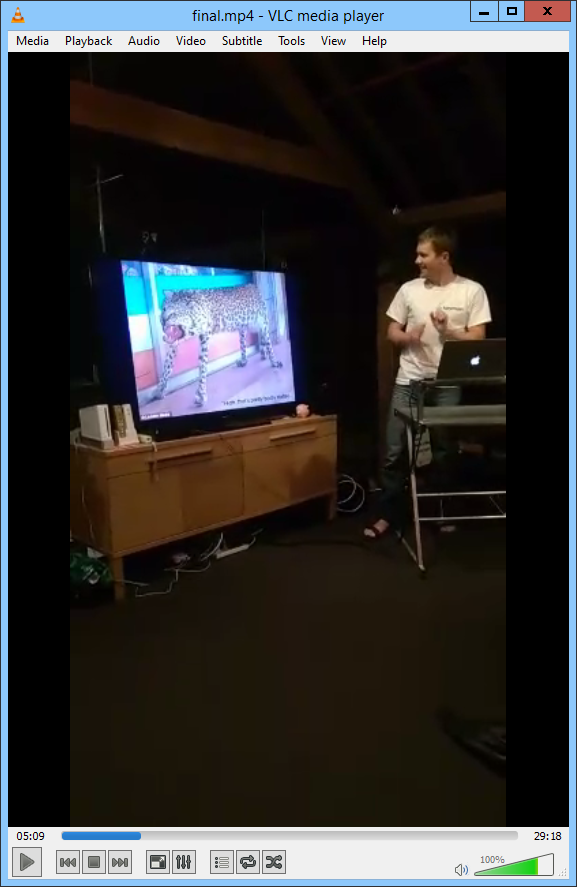

ffmpeg.exe -ss 00:02:45 -i test2.mp4 -acodec copy -vcodec copy final.mp4
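If you would rather work in seconds than type out timestamps, a tiny Python helper can build the same command. The function names are hypothetical. One thing to be aware of: because the streams are copied rather than re-encoded, ffmpeg can only cut at a keyframe, so the video may not start at exactly the moment you ask for.

```python
def timestamp(seconds):
    """Format a whole number of seconds as HH:MM:SS for ffmpeg's -ss option."""
    hours, rest = divmod(int(seconds), 3600)
    minutes, secs = divmod(rest, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}"


def trim_command(source, output, start_seconds, ffmpeg="ffmpeg"):
    """Build an ffmpeg argument list that drops everything before start_seconds."""
    return [
        ffmpeg,
        "-ss", timestamp(start_seconds),  # placed before -i, so ffmpeg seeks in the input
        "-i", source,
        "-acodec", "copy",                # copy audio without re-encoding
        "-vcodec", "copy",                # copy video without re-encoding
        output,
    ]


if __name__ == "__main__":
    # 2 minutes 45 seconds, the same start point as the command above.
    print(" ".join(trim_command("test2.mp4", "final.mp4", 165)))
```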

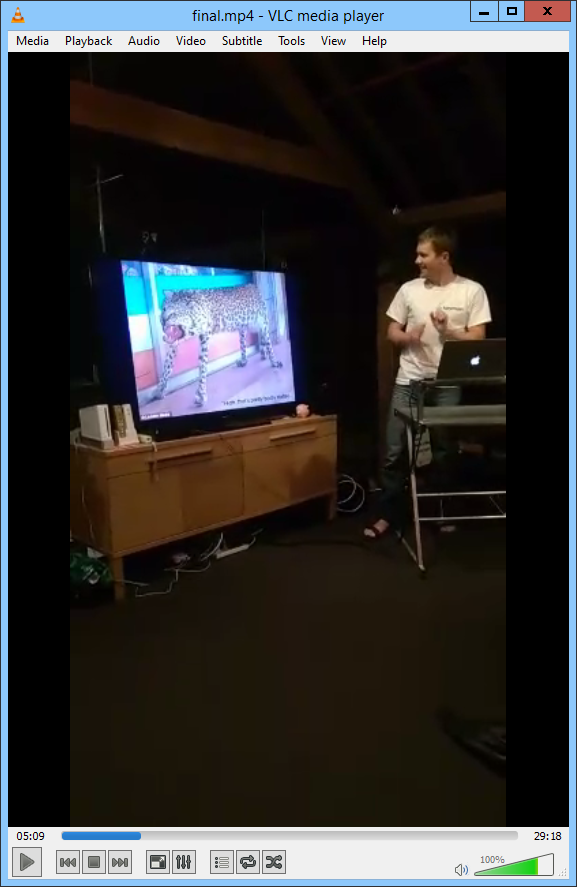

And now we have a final.mp4 video, which works beautifully.

Shame about the guy in the video.

If you do actually want to watch my presentation, you can see all but the last two minutes on YouTube!

http://youtu.be/77pMT3Ogw7g